Artificial Intelligence: A Perspective

Guest Article

Pramode Verma*

*Professor Emeritus of Electrical and Computer Engineering, The University of Oklahoma, pverma@ou.edu [see Author Capsule Bio below]

“Pre-publication article copy,” to appear in the December 2023 edition of the Journal of Critical Infrastructure Policy.

Critical analyses of the potential of AI are appearing everywhere at an increasingly rapid rate. Scientists and technologists are working hard to continuously enhance AI so that it matches, and possibly exceeds, the capabilities normally attributed to humans. Social scientists, on the other hand, are struggling to develop adequate safety mechanisms that will limit the damage AI can inflict on unsuspecting individuals or organizations. The euphoria of pushing AI to ever higher levels in the service of mankind appears to have suddenly given way to fear of a dark outcome looming on the horizon.

When put into practice, human intelligence aided by machines has consistently benefited mankind. Is AI a tipping point at which machine intellect might become too good for humanity? This letter examines ways in which AI can inflict damage and proposes how that damage can be contained, if not eliminated.

Artificial Intelligence is by no means a new endeavor. Since the 1950s, AI researchers have tried to create machines that could mimic or surpass the capabilities of humans. Until recently, AI was viewed as offering a human-machine continuum with a flexible boundary. Where that boundary lay depended on the needs of the task and on the human element responsible for the task.

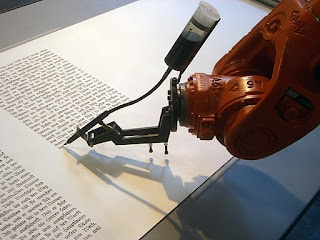

The generative properties of AI have challenged this notion. Generative AI can draw pictures, write a book, compose a poem, or generate text with little guidance. It relies on patterns and similarities in the underlying data and on the relationship of that data to its labels or descriptors. Machine learning continuously deepens and broadens these relationships by mining the massive amounts of data to which Generative AI has access and by applying the relationships in context.

Generative AI algorithms are built on neural networks. The algorithms use autoregressive techniques, choosing the most likely next word, phrase, or icon based on the statistical analysis of hundreds of gigabytes of Internet text. The repertoire of information is growing at an exponential rate, thus enhancing the capabilities of AI. In many instances, the outcomes delivered by Generative AI have surprised their developers and surpassed their expectations.
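The idea of "choosing the most likely word" can be illustrated with a deliberately tiny Python sketch. It is a toy bigram model, not a neural network: it counts which word follows which in a small sample text and then greedily picks the most frequent successor. Real generative AI systems learn far richer statistics with neural networks trained on vast corpora, so treat this only as an illustration of the statistical principle; the function names are hypothetical.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(corpus: str) -> dict[str, Counter]:
    """Count, for every word, how often each other word follows it."""
    words = corpus.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def most_likely_next(follows: dict[str, Counter], word: str) -> str | None:
    """Return the most frequent successor of `word`, or None if it has none."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


def generate(follows: dict[str, Counter], start: str, length: int) -> list[str]:
    """Greedily extend `start` by repeatedly choosing the most likely next word."""
    out = [start.lower()]
    for _ in range(length):
        nxt = most_likely_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return out


model = train_bigrams("the cat sat on the mat and the cat sat on the rug")
print(" ".join(generate(model, "the", 4)))  # prints "the cat sat on the"
```

Scaling this from word-pair counts on one sentence to learned representations over hundreds of gigabytes of text is precisely what the neural-network machinery adds.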

Can AI replace human beings in their entirety? This remains an open question because humans also display consciousness, feelings, emotions, and sensations. Some of these attributes arise from the intricate relationship between mind and body. Furthermore, the sensory mechanisms of the human body are complex and distributed, and include touch, taste, and smell. While it’s possible to build mechanisms that mimic these features in a robot, possibly in a limited way, the commercial benefits of such an undertaking are questionable. Currently, the major drivers of the science and technology of AI are its applications in day-to-day business.

How should one regulate AI? Because AI is driven by algorithms and processing capabilities along with access to massive amounts of data, its innate growth is unstoppable. We posit that regulation of AI should be viewed from the perspective of those on whom it has the potential to inflict damage. In other words, the rights of the recipients whom AI can hurt should drive AI regulation.

The ability of AI to create misinformation is potentially its most damaging outcome. Unfortunately, no one can control the ability to generate misinformation any more than one can control human thought processes. Once generated, misinformation can be delivered through the Internet on a person-to-person basis or through a broadcast medium. Another compelling example is misinformation generated by a database, such as a medical database, and delivered to an inquiring customer over the Internet. The ability to transport misinformation worldwide, instantaneously, adds to the gravity of the situation.

Let’s first consider misinformation delivered over the Internet. The most important step is to positively identify the sender at the behest of the receiving entity. This can be accomplished in a variety of ways. One possibility is the use of public and private keys. However, the computational overhead of such a scheme will likely make it unattractive to most consumers.
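A minimal sketch of how public and private keys can identify a sender is a digital signature: the sender signs with a private key, and any receiver checks the signature with the matching public key. The Python below uses textbook RSA built from two Mersenne primes chosen purely for readability and applies no padding, so it is insecure and serves only as an illustration, not as a scheme the article prescribes. The modular exponentiations (`pow`) are where the computational overhead mentioned above comes from.

```python
import hashlib

# Toy RSA key pair built from two known Mersenne primes (2**61 - 1 and 2**89 - 1).
# Unpadded and far too small for real use: illustration only.
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q                           # public modulus, shared with everyone
E = 65537                           # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))   # private exponent, kept by the sender (Python 3.8+)


def digest(message: bytes) -> int:
    """Hash the message with SHA-256 and reduce it modulo N."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes) -> int:
    """Sender: produce a signature using the private key (D, N)."""
    return pow(digest(message), D, N)


def verify(message: bytes, signature: int) -> bool:
    """Receiver: check the signature using the sender's public key (E, N)."""
    return pow(signature, E, N) == digest(message)


msg = b"Quarterly statement from Example Bank"
sig = sign(msg)
print(verify(msg, sig))                                  # True
print(verify(b"Quarterly statement from Mallory", sig))  # False (with overwhelming probability)
```

Production systems would instead rely on a vetted cryptographic library with standardized padding (such as RSA-PSS) or elliptic-curve signatures.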

An alternative is to require every ISP to positively identify its customers. The receiving entity in such a network should have the option of requiring the network to forward information only from those entities whose vetted credentials accompany the message. This arrangement should not violate freedom of speech or expression; it merely empowers the receiving entity to deny access to unknown or unapproved entities. And it’s no more limiting than locking the door of your home to strangers.
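The receiver-side choice can be sketched as a simple filter that the network applies on the receiver's behalf. In this hypothetical Python model, each message carries the vetted credential attached by the sender's ISP (or `None` if there is none), and the receiver's policy decides whether unvetted or unapproved senders are forwarded at all. The class, field, and function names are illustrative assumptions, not part of any existing protocol.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    body: str
    # Vetted identity attached by the sender's ISP; None if the sender is unvetted.
    sender_credential: str | None


@dataclass(frozen=True)
class ReceiverPolicy:
    require_vetted: bool = True
    # None means "any vetted sender"; otherwise only these identities get through.
    approved_senders: frozenset[str] | None = None

    def accepts(self, msg: Message) -> bool:
        if not self.require_vetted:
            return True                      # receiver opted out of filtering
        if msg.sender_credential is None:
            return False                     # unknown sender: the door stays locked
        if self.approved_senders is None:
            return True
        return msg.sender_credential in self.approved_senders


def forward_to_receiver(policy: ReceiverPolicy, messages: list[Message]) -> list[Message]:
    """Network-side step: forward only the messages the receiver's policy accepts."""
    return [m for m in messages if policy.accepts(m)]


incoming = [
    Message("Your statement is ready", "bank.example/customer-42"),
    Message("You won a prize!", None),
    Message("Hello from a vetted stranger", "isp.example/customer-7"),
]
strict = ReceiverPolicy(approved_senders=frozenset({"bank.example/customer-42"}))
print([m.body for m in forward_to_receiver(strict, incoming)])
# prints ['Your statement is ready']
```

Because filtering is opt-in and configured by the receiver, the sender's ability to speak is untouched; only delivery to this particular receiver is conditioned on vetted credentials.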

The positive identification of a client by the entity with which the client has a relationship is prevalent among many institutions. In financial institutions, it’s known as the Know Your Customer (KYC) requirement. Forwarding the sender’s identity to a discerning receiver should not be difficult to implement. Any digital device connected to the Internet can be uniquely identified by the network through its Layer 2 (MAC) address. This can be supplemented by higher-layer credentials (such as a password) that uniquely identify an individual, a process, or an institution. Indeed, this kind of positive identification is already implemented by financial institutions. The resulting identity can then be forwarded to a discerning end point.
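The ISP side of this arrangement can be sketched as a KYC-style registry: the ISP recognizes the subscriber's device by its Layer 2 (MAC) address, confirms the individual with a password, and attaches the vetted identity to outgoing messages. The Python below is a hypothetical sketch under those assumptions; it stores only salted PBKDF2 password hashes using the standard library, and the registry layout, identity strings, and iteration count are illustrative.

```python
from __future__ import annotations

import hashlib
import hmac
import os
from dataclasses import dataclass

PBKDF2_ITERATIONS = 100_000  # illustrative work factor


def hash_password(password: str, salt: bytes) -> bytes:
    """Derive a salted password hash with PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, PBKDF2_ITERATIONS)


@dataclass
class Subscriber:
    vetted_identity: str  # identity established by a KYC-style check
    salt: bytes
    password_hash: bytes


class IspRegistry:
    """Hypothetical ISP registry keyed by the subscriber device's Layer 2 address."""

    def __init__(self) -> None:
        self._by_mac: dict[str, Subscriber] = {}

    def enroll(self, identity: str, mac: str, password: str) -> None:
        salt = os.urandom(16)
        self._by_mac[mac.lower()] = Subscriber(identity, salt, hash_password(password, salt))

    def identify(self, mac: str, password: str) -> str | None:
        """Return the vetted identity if both the device and the password match."""
        sub = self._by_mac.get(mac.lower())
        if sub is None:
            return None
        candidate = hash_password(password, sub.salt)
        if not hmac.compare_digest(candidate, sub.password_hash):
            return None
        return sub.vetted_identity

    def tag_outgoing(self, mac: str, password: str, body: str) -> dict[str, str | None]:
        """Attach the sender's vetted identity (or None) so receivers can filter."""
        return {"body": body, "sender_identity": self.identify(mac, password)}


isp = IspRegistry()
isp.enroll("Jane Doe (KYC record 001)", "00:1A:2B:3C:4D:5E", "correct horse")
print(isp.tag_outgoing("00:1a:2b:3c:4d:5e", "correct horse", "Hi"))
# prints {'body': 'Hi', 'sender_identity': 'Jane Doe (KYC record 001)'}
```

Keying on the device address alone would identify hardware rather than a person, which is why the sketch requires the password check before any identity is attached.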

Let us now take up the case of broadcast media. In most countries, such media are regulated. The regulation can easily require that the entity originating any content the medium broadcasts be vetted for authenticity. The vetted identity can then be displayed as part of the broadcast.

The third element that requires regulation is access to publicly available databases using Artificial Intelligence. A leading example is a publicly available health-related database. Such databases should be vetted by the relevant regulatory bodies or by public institutions at large. For example, a database that answers health-related queries should have the accuracy of its responses measured and rated by the country’s medical establishment.
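One way a regulator might measure and rate such a database is to compare its answers against a reference set of questions with expert-approved answers. The Python sketch below assumes exact, case-insensitive matching and hypothetical rating bands; a real evaluation by a medical establishment would need clinically meaningful grading rather than string comparison.

```python
from __future__ import annotations

from typing import Callable


def accuracy(answer: Callable[[str], str], reference: dict[str, str]) -> float:
    """Fraction of reference questions answered exactly as the experts did."""
    if not reference:
        raise ValueError("reference set must not be empty")
    correct = sum(
        1
        for question, expected in reference.items()
        if answer(question).strip().lower() == expected.strip().lower()
    )
    return correct / len(reference)


def rating(score: float) -> str:
    """Map an accuracy score to hypothetical rating bands."""
    if score >= 0.95:
        return "A"
    if score >= 0.85:
        return "B"
    if score >= 0.70:
        return "C"
    return "not rated"


# Hypothetical expert-approved reference set.
REFERENCE = {
    "Deficiency of which vitamin causes scurvy?": "vitamin C",
    "Which organ produces insulin?": "pancreas",
    "How many chambers does the human heart have?": "four",
    "Which organs filter blood to produce urine?": "kidneys",
}

# A toy database whose last answer is wrong.
TOY_DB = {
    "Deficiency of which vitamin causes scurvy?": "Vitamin C",
    "Which organ produces insulin?": "Pancreas",
    "How many chambers does the human heart have?": "four",
    "Which organs filter blood to produce urine?": "liver",
}


def toy_answer(question: str) -> str:
    return TOY_DB.get(question, "")


score = accuracy(toy_answer, REFERENCE)
print(score, rating(score))  # prints 0.75 C
```

Publishing the score and rating alongside the database's answers would let an inquiring customer judge how much to trust a response.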

In some ways, human intelligence has grown too fast for the good of humanity. Every time technology has enhanced our capacity to produce goods at levels unimaginable with human labor alone, there has been collateral damage.

Like any new technology that comes into practice, AI will cause collateral damage. There will be job losses, especially among the most vulnerable members of society, e.g., those who cannot easily adapt to changed modes of operation, such as the elderly or those disadvantaged in other ways. Since AI is so heavily dependent on digital literacy, countries that are less technologically literate will be left further behind. And widening resource gaps between and among nations are not good for humanity as a whole.

Undoubtedly, AI will grow closer and closer to the human brain, possibly even surpassing it. Arguably, it already has. Whether it will reach the human mind, which exhibits consciousness, instincts, and feelings, we currently do not know.

Author Capsule Bio

Pramode Verma is Professor Emeritus of Electrical and Computer Engineering at the Gallogly College of Engineering of the University of Oklahoma. Prior to that he was Professor, Williams Chair in Telecommunications Networking, and Director of the Telecommunications Engineering Program. He joined the University of Oklahoma in 1999 as the founder-director of a graduate program in Telecommunications Engineering. He is the author/co-author of over 150 journal articles and conference papers, and several books in telecommunications engineering. His academic credentials include a doctorate in electrical engineering from Concordia University in Montreal, and an MBA from the Wharton School of the University of Pennsylvania. Dr. Verma has been a keynote speaker at several international conferences. He received the University of Oklahoma-Tulsa President’s Leadership Award for Excellence in Research and Development in 2009. He is a Senior Member of the IEEE and a Senior Fellow of The Information and Telecommunication Education and Research Association. Prior to joining the University of Oklahoma, over a period of twenty-five years, he held a variety of professional, managerial and leadership positions in the telecommunications industry, most notably at AT&T Bell Laboratories and Lucent Technologies. He is the co-inventor of twelve patents.